[User Behavior Analysis] Training a word2vec model on the Chinese Wikipedia corpus

Original post: http://blog.csdn.net/hereiskxm/article/details/49664845

1. Preface

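I have recently been researching content-based user behavior analysis, and along the way I came across word2vec, a very useful algorithm. As the name suggests, word2vec is a tool that converts words into vectors. It was created at Google and open-sourced in 2013. Once words are vectors, we can compute how similar they are (via the distance or the angle between the vectors). The similarity of two vectors in the model corresponds to the semantic similarity of the two words; roughly speaking, it reflects how similar the contexts are in which the two words appear. For example, if we give the model "java" and "程序员" (programmer), it returns 0.47; for "java" and "化妆" (makeup), it returns 0.00014. Comparing the two shows that the first pair is far more semantically similar than the second, which opens up many possible applications. More word2vec examples follow later in this post.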
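To make "similarity via the angle between vectors" concrete, here is a minimal cosine-similarity sketch in plain Python. The three-dimensional vectors are invented for illustration; the real vectors in this post have 400 dimensions. The gensim traceback later in this post shows its similarity() computing dot(matutils.unitvec(...), matutils.unitvec(...)), which is this same cosine.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Toy vectors, made up for illustration only
java = [0.9, 0.1, 0.0]
programmer = [0.8, 0.3, 0.1]
makeup = [0.0, 0.1, 0.9]

print(cosine_similarity(java, programmer))  # close to 1: similar directions
print(cosine_similarity(java, makeup))      # close to 0: nearly orthogonal
```

Cosine ignores vector length and only compares direction, which is why it is the usual choice for word vectors.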

Official word2vec project page:

https://code.google.com/p/word2vec/

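word2vec has been implemented in several languages. The official project is written in C; there are also Java, Python, and Spark implementations, all linked from that page. This post uses the Python implementation for training.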

word2vec builds its model by learning from a corpus, and Wikipedia is a comprehensive source of text. Let's walk through training a model on the Wikipedia corpus.

2. Preparation

Download the Chinese Wikipedia corpus: https://dumps.wikimedia.org/zhwiki/latest/zhwiki-latest-pages-articles.xml.bz2 (for English, change zh in the link to en)

Install gensim and set up the Python environment: http://blog.csdn.net/hereiskxm/article/details/49424799

3. Corpus Processing

The Wikipedia dump is in XML format, so we convert it to plain text with the following Python script:

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
"""Usage: python process_wiki.py <wiki dump .xml.bz2> <output .txt>"""

import logging
import os.path
import sys

from gensim.corpora import WikiCorpus

if __name__ == "__main__":
    program = os.path.basename(sys.argv[0])
    logger = logging.getLogger(program)

    logging.basicConfig(format="%(asctime)s: %(levelname)s: %(message)s")
    logging.root.setLevel(level=logging.INFO)
    logger.info("running %s" % " ".join(sys.argv))

    # check and process input arguments
    if len(sys.argv) < 3:
        print(__doc__)
        sys.exit(1)
    inp, outp = sys.argv[1:3]
    space = " "
    i = 0

    # write one article per line, UTF-8 encoded
    with open(outp, "w", encoding="utf-8") as output:
        # dictionary={} skips building a vocabulary we don't need here;
        # older gensim also took lemmatize=False, which gensim 4.x rejects
        wiki = WikiCorpus(inp, dictionary={})
        for text in wiki.get_texts():
            output.write(space.join(text) + "\n")
            i = i + 1
            if i % 10000 == 0:
                logger.info("Saved " + str(i) + " articles")

    logger.info("Finished Saved " + str(i) + " articles")
```

Save the file as process_wiki.py and run it from the command line: python process_wiki.py zhwiki-latest-pages-articles.xml.bz2 wiki.zh.txt

```
2015-03-07 15:08:39,181: INFO: running process_enwiki.py zhwiki-latest-pages-articles.xml.bz2 wiki.zh.txt
2015-03-07 15:11:12,860: INFO: Saved 10000 articles
2015-03-07 15:13:25,369: INFO: Saved 20000 articles
2015-03-07 15:15:19,771: INFO: Saved 30000 articles
2015-03-07 15:16:58,424: INFO: Saved 40000 articles
2015-03-07 15:18:12,374: INFO: Saved 50000 articles
2015-03-07 15:19:03,213: INFO: Saved 60000 articles
2015-03-07 15:19:47,656: INFO: Saved 70000 articles
2015-03-07 15:20:29,135: INFO: Saved 80000 articles
2015-03-07 15:22:02,365: INFO: Saved 90000 articles
2015-03-07 15:23:40,141: INFO: Saved 100000 articles
.....
2015-03-07 19:33:16,549: INFO: Saved 3700000 articles
2015-03-07 19:33:49,493: INFO: Saved 3710000 articles
2015-03-07 19:34:23,442: INFO: Saved 3720000 articles
2015-03-07 19:34:57,984: INFO: Saved 3730000 articles
2015-03-07 19:35:31,976: INFO: Saved 3740000 articles
2015-03-07 19:36:05,790: INFO: Saved 3750000 articles
2015-03-07 19:36:32,392: INFO: finished iterating over Wikipedia corpus of 3758076 documents with 2018886604 positions (total 15271374 articles, 2075130438 positions before pruning articles shorter than 50 words)
2015-03-07 19:36:32,394: INFO: Finished Saved 3758076 articles
```

I no longer have the output from my own run; the console output looked roughly like the above. On my machine (8 GB RAM) it took about 30 minutes.

When it finishes, you will have a .txt file in the current directory, already converted to UTF-8 encoded plain text.

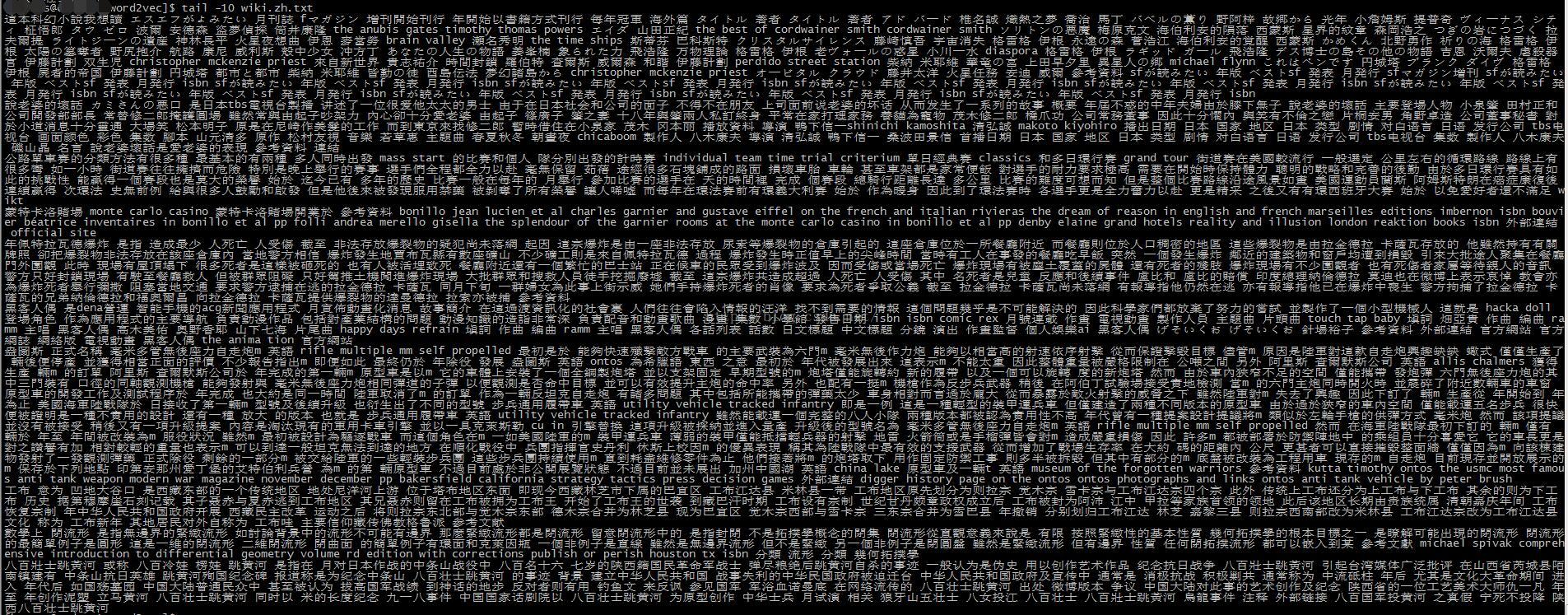

Opening it, you can see that the structure is roughly one article per line, with each line made up of many short phrases separated by spaces:

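Next we segment the text into words. I wrote a short Java program for this step; the pipeline is: traditional-to-simplified conversion -> word segmentation (with stopword removal) -> removal of unwanted words. I wasn't sure whether Wikipedia contains only traditional characters or a mix of traditional and simplified, so I converted everything to simplified. Otherwise word2vec would treat the traditional and simplified forms of the same word as two different words.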
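Why the conversion matters can be shown with a toy sketch. The character table `T2S` below is a tiny hand-written stand-in, assumed for illustration only; a real pipeline would use a complete conversion tool (OpenCC is a common choice). Without conversion, 電腦 and 电脑 are counted as two different words; after conversion their counts merge into one vocabulary entry.

```python
from collections import Counter

# Hypothetical, tiny traditional -> simplified character table (illustration only)
T2S = str.maketrans({"電": "电", "腦": "脑", "網": "网", "絡": "络", "體": "体"})


def to_simplified(text):
    """Replace every traditional character found in the table with its simplified form."""
    return text.translate(T2S)


tokens = ["電腦", "电脑", "網絡", "网络", "网络"]

before = Counter(tokens)
after = Counter(to_simplified(t) for t in tokens)

print(before)  # four distinct words: 電腦, 电脑, 網絡, 网络
print(after)   # two distinct words: 电脑 counted 2 times, 网络 counted 3 times
```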

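Segmentation needs some care: besides removing stopwords, we should choose a segmentation algorithm that recognizes person names. MMSeg was my first choice, but I later dropped it because it does not recognize proper nouns, brand names, or person names as single words; it splits them into individual characters. That behavior suits a search engine, but it is clearly wrong here, because it would lose a lot of information. In the end I chose the word segmentation library, and the results were reasonably satisfactory.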
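The "names fall apart into single characters" problem can be reproduced with plain forward maximum matching, a simpler dictionary-based method than MMSeg (MMSeg adds extra disambiguation rules on top of maximum matching). This is a sketch with a made-up toy dictionary, not the segmenter used in this post: any name missing from the dictionary is split into single characters, while adding it as an entry, which is roughly what name recognition achieves, keeps it whole.

```python
def forward_max_match(text, dictionary, max_len=4):
    """Greedy left-to-right segmentation: take the longest dictionary word at each position."""
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest candidate first, shrinking until a dictionary hit;
        # a single character is always accepted as a fallback
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if size == 1 or piece in dictionary:
                tokens.append(piece)
                i += size
                break
    return tokens


# Toy dictionary without the name 梅西 (Messi)
print(forward_max_match("梅西是足球运动员", {"足球", "运动员"}))
# With the name added as an entry
print(forward_max_match("梅西是足球运动员", {"足球", "运动员", "梅西"}))
```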

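Which words to remove is a judgment call. I removed pure numbers and tokens made of digits plus Chinese characters, because I didn't need them and, for my purposes, they were noise.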
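The removal rule above can be written as a small filter. This is a sketch, not the author's Java code: the regular expression treats a token as numeric noise if it consists only of digits, decimal points and Chinese characters and contains at least one digit (so both 2015 and 2015年 are dropped), and the stopword set is a hypothetical placeholder.

```python
import re

# Tokens made only of digits, "." and CJK Unified Ideographs, with at least one digit:
# matches "2015", "3.14", "2015年", "第3章"; does not match "足球" or "mp3"
NUMERIC_TOKEN = re.compile(r"^[0-9.\u4e00-\u9fff]*[0-9][0-9.\u4e00-\u9fff]*$")

# Hypothetical stopword list, for illustration only
STOPWORDS = {"的", "了", "是"}


def filter_tokens(tokens, stopwords=STOPWORDS):
    """Drop stopwords, pure numbers and digit+Chinese tokens."""
    return [t for t in tokens if t not in stopwords and not NUMERIC_TOKEN.match(t)]


print(filter_tokens(["2015年", "的", "足球", "联赛", "3", "mp3"]))
# ['足球', '联赛', 'mp3']
```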

After segmentation, the file looks like this:

I kept the one-article-per-line format. Spot-checking proper nouns and person names shows they were mostly segmented correctly.
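Keeping one article per line matters because gensim's `LineSentence`, used in the training script, treats each line as one "sentence" of whitespace-separated tokens. Its documentation also describes a `max_sentence_length` parameter (10000 tokens by default) beyond which a line is cut into consecutive chunks, so a long article reaches training as several pieces. The standard-library sketch below mimics that behavior under those assumptions; `iter_sentences` is a hypothetical name, not a gensim function.

```python
import io


def iter_sentences(lines, max_sentence_length=10000):
    """Yield whitespace-split token lists, one per line, chunked to max_sentence_length."""
    for line in lines:
        tokens = line.split()
        # Long lines (long articles) are cut into consecutive chunks; empty lines yield nothing
        for start in range(0, len(tokens), max_sentence_length):
            yield tokens[start:start + max_sentence_length]


# In-memory stand-in for the segmented corpus file: one article per line
corpus = io.StringIO("足球 联赛 甲级 俱乐部\n电影 影片 喜剧片\n")
for sentence in iter_sentences(corpus, max_sentence_length=3):
    print(sentence)
```

Streaming line by line like this keeps memory use flat no matter how large the corpus file is.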

4. Model Training

The code is as follows:

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
"""Usage: python train_word2vec_model.py <segmented corpus> <model file> <vector file>"""

import logging
import multiprocessing
import os.path
import sys

from gensim.models import Word2Vec
from gensim.models.word2vec import LineSentence

if __name__ == "__main__":
    program = os.path.basename(sys.argv[0])
    logger = logging.getLogger(program)

    logging.basicConfig(format="%(asctime)s: %(levelname)s: %(message)s")
    logging.root.setLevel(level=logging.INFO)
    logger.info("running %s" % " ".join(sys.argv))

    # check and process input arguments
    if len(sys.argv) < 4:
        print(__doc__)
        sys.exit(1)
    inp, outp1, outp2 = sys.argv[1:4]

    # LineSentence reads one whitespace-tokenized "sentence" per line (here: one article).
    # gensim >= 4.0 renamed `size` to `vector_size`. sg=1, hs=1, negative=0, sample=0
    # match the setup reported in the training log (skip-gram, hierarchical softmax);
    # newer gensim defaults to CBOW with negative sampling.
    model = Word2Vec(LineSentence(inp), vector_size=400, window=5, min_count=5,
                     sg=1, hs=1, negative=0, sample=0,
                     workers=multiprocessing.cpu_count())

    # full model, can be reloaded later with Word2Vec.load()
    model.save(outp1)
    # word vectors only, in the plain-text word2vec format
    model.wv.save_word2vec_format(outp2, binary=False)
```
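`min_count=5` discards every word that occurs fewer than 5 times in the corpus before training. In the training log this appears as "total 278291 word types after removing those with count<5", down from 1575172 collected word types. A minimal standard-library sketch of that vocabulary pruning (`vocab_with_min_count` is a hypothetical helper, not part of gensim):

```python
from collections import Counter


def vocab_with_min_count(sentences, min_count=5):
    """Count every token, then keep only those seen at least min_count times."""
    counts = Counter(token for sentence in sentences for token in sentence)
    return {word: n for word, n in counts.items() if n >= min_count}


sentences = [["足球", "联赛"], ["足球", "俱乐部"], ["足球", "联赛"]]
print(vocab_with_min_count(sentences, min_count=2))  # {'足球': 3, '联赛': 2}
```

Rare words have too few contexts to learn a useful vector from, and dropping them also shrinks the model considerably.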

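Run the script on the segmented corpus produced in the previous step (named wiki.zh.text.jian.seg.utf-8 in the log): python train_word2vec_model.py wiki.zh.text.jian.seg.utf-8 wiki.zh.text.model wiki.zh.text.vector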

The output looks roughly like this:

```
2015-03-11 18:50:02,586: INFO: running train_word2vec_model.py wiki.zh.text.jian.seg.utf-8 wiki.zh.text.model wiki.zh.text.vector
2015-03-11 18:50:02,592: INFO: collecting all words and their counts
2015-03-11 18:50:02,592: INFO: PROGRESS: at sentence #0, processed 0 words and 0 word types
2015-03-11 18:50:12,476: INFO: PROGRESS: at sentence #10000, processed 12914562 words and 254662 word types
2015-03-11 18:50:20,215: INFO: PROGRESS: at sentence #20000, processed 22308801 words and 373573 word types
2015-03-11 18:50:28,448: INFO: PROGRESS: at sentence #30000, processed 30724902 words and 460837 word types
...
2015-03-11 18:52:03,498: INFO: PROGRESS: at sentence #210000, processed 143804601 words and 1483608 word types
2015-03-11 18:52:07,772: INFO: PROGRESS: at sentence #220000, processed 149352283 words and 1521199 word types
2015-03-11 18:52:11,639: INFO: PROGRESS: at sentence #230000, processed 154741839 words and 1563584 word types
2015-03-11 18:52:12,746: INFO: collected 1575172 word types from a corpus of 156430908 words and 232894 sentences
2015-03-11 18:52:13,672: INFO: total 278291 word types after removing those with count<5
2015-03-11 18:52:13,673: INFO: constructing a huffman tree from 278291 words
2015-03-11 18:52:29,323: INFO: built huffman tree with maximum node depth 25
2015-03-11 18:52:29,683: INFO: resetting layer weights
2015-03-11 18:52:38,805: INFO: training model with 4 workers on 278291 vocabulary and 400 features, using "skipgram"=1 "hierarchical softmax"=1 "subsample"=0 and "negative sampling"=0
2015-03-11 18:52:49,504: INFO: PROGRESS: at 0.10% words, alpha 0.02500, 15008 words/s
2015-03-11 18:52:51,935: INFO: PROGRESS: at 0.38% words, alpha 0.02500, 44434 words/s
2015-03-11 18:52:54,779: INFO: PROGRESS: at 0.56% words, alpha 0.02500, 53965 words/s
2015-03-11 18:52:57,240: INFO: PROGRESS: at 0.62% words, alpha 0.02491, 52116 words/s
2015-03-11 18:52:58,823: INFO: PROGRESS: at 0.72% words, alpha 0.02494, 55804 words/s
2015-03-11 18:53:03,649: INFO: PROGRESS: at 0.94% words, alpha 0.02486, 58277 words/s
2015-03-11 18:53:07,357: INFO: PROGRESS: at 1.03% words, alpha 0.02479, 56036 words/s
......
2015-03-11 19:22:09,002: INFO: PROGRESS: at 98.38% words, alpha 0.00044, 85936 words/s
2015-03-11 19:22:10,321: INFO: PROGRESS: at 98.50% words, alpha 0.00044, 85971 words/s
2015-03-11 19:22:11,934: INFO: PROGRESS: at 98.55% words, alpha 0.00039, 85940 words/s
2015-03-11 19:22:13,384: INFO: PROGRESS: at 98.65% words, alpha 0.00036, 85960 words/s
2015-03-11 19:22:13,883: INFO: training on 152625573 words took 1775.1s, 85982 words/s
2015-03-11 19:22:13,883: INFO: saving Word2Vec object under wiki.zh.text.model, separately None
2015-03-11 19:22:13,884: INFO: not storing attribute syn0norm
2015-03-11 19:22:13,884: INFO: storing numpy array "syn0" to wiki.zh.text.model.syn0.npy
2015-03-11 19:22:20,797: INFO: storing numpy array "syn1" to wiki.zh.text.model.syn1.npy
2015-03-11 19:22:40,667: INFO: storing 278291x400 projection weights into wiki.zh.text.vector
```

Training took about 30 minutes.
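The log reports "skipgram"=1, i.e. the skip-gram architecture: for each word, the model learns to predict the words within `window` positions on either side of it. The sketch below only generates those (center, context) training pairs and trains nothing; `skipgram_pairs` is a hypothetical helper. Note that word2vec (both the C tool and gensim) actually shrinks the window by a random amount for each center word, so nearer words are used more often; this sketch uses the full window for clarity.

```python
def skipgram_pairs(tokens, window=5):
    """Return (center, context) pairs for every context word within `window` positions."""
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs


print(skipgram_pairs(["女儿", "嫁给", "丈夫"], window=1))
# [('女儿', '嫁给'), ('嫁给', '女儿'), ('嫁给', '丈夫'), ('丈夫', '嫁给')]
```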

Let's look at the contents of wiki.zh.text.vector:

Each line holds one word's vector: the word comes first, followed by the value of each dimension of its vector.
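This text format is easy to read without gensim. In the text output written by gensim, the first line is a header with the vocabulary size and the vector dimension (278291 and 400 for this model), and each following line is a word and its values separated by spaces. A minimal reader assuming that layout (`load_text_vectors` is a hypothetical name; gensim itself provides `KeyedVectors.load_word2vec_format`):

```python
import io


def load_text_vectors(f):
    """Read word2vec text format: header 'count dim', then 'word v1 ... vdim' per line."""
    count, dim = (int(x) for x in f.readline().split())
    vectors = {}
    for line in f:
        if not line.strip():
            continue  # tolerate blank lines, e.g. at the end of the file
        parts = line.rstrip().split(" ")
        word, values = parts[0], parts[1:]
        if len(values) != dim:
            raise ValueError("bad vector length for %r" % word)
        vectors[word] = [float(v) for v in values]
    if len(vectors) != count:
        raise ValueError("expected %d words, got %d" % (count, len(vectors)))
    return vectors


sample = io.StringIO("2 3\n足球 0.1 0.2 0.3\n篮球 0.1 0.25 0.3\n")
vecs = load_text_vectors(sample)
print(vecs["篮球"])  # [0.1, 0.25, 0.3]
```

For the real file, open it with `encoding="utf-8"` and pass the file object in. Keep in mind that 278291 x 400 values stored as Python floats take several gigabytes, so this is for illustration rather than production use.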

Let's test the model. This session used Python 2 and an older gensim; in gensim 4.x the query methods live on `model.wv`, e.g. `model.wv.most_similar(...)` and `model.wv.similarity(...)`.

```
Python 2.7.10 |Anaconda 2.3.0 (64-bit)| (default, May 28 2015, 17:02:03)
[GCC 4.4.7 20120313 (Red Hat 4.4.7-1)] on linux2
Type "help", "copyright", "credits" or "license" for more information.
Anaconda is brought to you by Continuum Analytics.
Please check out: http://continuum.io/thanks and https://binstar.org
>>> import gensim  # 1. import gensim
WARNING (theano.configdefaults): g++ not detected ! Theano will be unable to execute optimized C-implementations (for both CPU and GPU) and will default to Python implementations. Performance will be severely degraded. To remove this warning, set Theano flags cxx to an empty string.
>>> model = gensim.models.Word2Vec.load("wiki.zh.text.model")  # 2. load the model
>>> model.most_similar(u"足球")  # 3. the words most related to 足球 (football)
[(u"\u7532\u7ea7", 0.72623610496521), (u"\u8054\u8d5b", 0.6967484951019287), (u"\u4e59\u7ea7", 0.6815086603164673), (u"\u5973\u5b50\u8db3\u7403", 0.6662559509277344), (u"\u8377\u5170\u8db3\u7403", 0.6462257504463196), (u"\u8db3\u7403\u961f", 0.6444228887557983), (u"\u5fb7\u56fd\u8db3\u7403", 0.6352497935295105), (u"\u8db3\u7403\u534f\u4f1a", 0.6313000917434692), (u"\u6bd4\u5229\u65f6\u676f", 0.6311478614807129), (u"\u4ff1\u4e50\u90e8", 0.6295265555381775)]
```

Decoded, the result is:

[(u"甲级", 0.72623610496521), (u"联赛", 0.6967484951019287), (u"乙级", 0.6815086603164673), (u"女子足球", 0.6662559509277344), (u"荷兰足球", 0.6462257504463196), (u"足球队", 0.6444228887557983), (u"德国足球", 0.6352497935295105), (u"足球协会", 0.6313000917434692), (u"比利时杯", 0.6311478614807129), (u"俱乐部", 0.6295265555381775)]
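Conceptually, `most_similar` ranks every other word in the vocabulary by cosine similarity to the query word and returns the top N. Below is a brute-force sketch over a plain dict of vectors; the toy vectors are invented and `top_similar` is a hypothetical helper. gensim's own implementation does the same ranking with vectorized numpy operations on normalized vectors rather than Python loops.

```python
import heapq
import math


def cosine(a, b):
    """Cosine similarity; 0.0 if either vector has zero length."""
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return sum(x * y for x, y in zip(a, b)) / norm if norm else 0.0


def top_similar(vectors, word, topn=10):
    """Return the topn (word, similarity) pairs closest to `word`, excluding the word itself."""
    if word not in vectors:
        raise KeyError(word)
    query = vectors[word]
    scored = ((other, cosine(query, vec)) for other, vec in vectors.items() if other != word)
    return heapq.nlargest(topn, scored, key=lambda pair: pair[1])


# Invented 3-dimensional vectors, for illustration only
toy = {
    "足球": [0.9, 0.4, 0.1],
    "篮球": [0.8, 0.5, 0.2],
    "电影": [0.1, 0.2, 0.9],
}
print(top_similar(toy, "足球", topn=2))  # 篮球 ranks first, 电影 second
```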

Other examples:

兔子 (rabbit)

[(u"一只", 0.5688103437423706), (u"小狗", 0.5381371974945068), (u"来点", 0.5336571931838989), (u"猫", 0.5334546566009521), (u"老鼠", 0.5177739858627319), (u"青蛙", 0.4972209334373474), (u"狗", 0.4893607497215271), (u"狐狸", 0.48909318447113037), (u"猫咪", 0.47951626777648926), (u"兔", 0.4779919385910034)]

股票 (stock)

[(u"交易所", 0.7432450652122498), (u"证券", 0.7410451769828796), (u"ipo", 0.7379931211471558), (u"股价", 0.7343258857727051), (u"期货", 0.7162749767303467), (u"每股", 0.700008749961853), (u"kospi", 0.6965476274490356), (u"换股", 0.6927754282951355), (u"创业板", 0.6897038221359253), (u"收盘报", 0.6847120523452759)]

音乐 (music)

[(u"流行", 0.6373772621154785), (u"录影带", 0.6004574298858643), (u"音乐演奏", 0.5833497047424316), (u"starships", 0.5774279832839966), (u"乐风", 0.5701465606689453), (u"流行摇滚", 0.5658928155899048), (u"流行乐", 0.5657000541687012), (u"雷鬼音乐", 0.5649317502975464), (u"后摇滚", 0.5644392371177673), (u"节目音乐", 0.5602964162826538)]

电影 (movie)

[(u"悬疑片", 0.7044901847839355), (u"本片", 0.6980072259902954), (u"喜剧片", 0.6958730220794678), (u"影片", 0.6780649423599243), (u"长片", 0.6747604608535767), (u"动作片", 0.6695533990859985), (u"该片", 0.6645846366882324), (u"科幻片", 0.6598016023635864), (u"爱情片", 0.6590006947517395), (u"此片", 0.6568557024002075)]

招标 (tender / call for bids)

[(u"批出", 0.6294339895248413), (u"工程招标", 0.6275389194488525), (u"标书", 0.613800048828125), (u"公开招标", 0.5919119715690613), (u"开标", 0.5917631387710571), (u"流标", 0.5791728496551514), (u"决标", 0.5737971067428589), (u"施工", 0.5641534328460693), (u"中标者", 0.5528303980827332), (u"建设期", 0.5518919825553894)]

女儿 (daughter)

[(u"妻子", 0.8230685591697693), (u"儿子", 0.8067737817764282), (u"丈夫", 0.7952680587768555), (u"母亲", 0.7670352458953857), (u"小女儿", 0.7492039203643799), (u"亲生", 0.7171255350112915), (u"生下", 0.7126915454864502), (u"嫁给", 0.7088421583175659), (u"孩子", 0.703400731086731), (u"弟弟", 0.7023398876190186)]

Comparing the similarity of two words:

```
>>> model.similarity(u"足球",u"运动")
0.1059533903509985
>>> model.similarity(u"足球",u"球类")
0.26147174351997882
>>> model.similarity(u"足球",u"篮球")
0.56019543381248371
>>> model.similarity(u"IT",u"程序员")
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "/home/hermes/anaconda/lib/python2.7/site-packages/gensim/models/word2vec.py", line 1279, in similarity
    return dot(matutils.unitvec(self[w1]), matutils.unitvec(self[w2]))
  File "/home/hermes/anaconda/lib/python2.7/site-packages/gensim/models/word2vec.py", line 1259, in __getitem__
    return self.syn0[self.vocab[words].index]
KeyError: u"IT"
```

Analysis:

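The similarity between "足球" (football) and "运动" (sport) is only 0.1, and between "足球" and "球类" (ball games) it is 0.26. So words in a broader/narrower category relationship do not score especially high, although the narrower the category, the higher the similarity. "足球" and "篮球" (basketball) score 0.56, showing that sibling words at the same level are fairly similar. Computing the similarity between "IT" and "程序员" (programmer) raised an error: the word IT does not exist in the model, presumably because it was treated as "it" and filtered out during stopword removal.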
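Because gensim raises `KeyError` for a word outside the vocabulary, it is safer to check membership before querying; in gensim 4.x, `word in model.wv` performs this check. Also note that gensim's WikiCorpus lowercases text by default, which fits the fact that every Latin-script word in the results above (java, ipo, kospi) is lowercase, so a lookup for "IT" could never match anyway. Below is a standard-library sketch of such a guard over a plain dict of vectors that returns `None` for unknown words, as the Java version at the end of this post returns null; `safe_similarity` and the toy vectors are hypothetical.

```python
import math


def safe_similarity(vectors, w1, w2):
    """Cosine similarity of two words, or None if either word is out of vocabulary."""
    if w1 not in vectors or w2 not in vectors:
        return None
    a, b = vectors[w1], vectors[w2]
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else None


# Invented 2-dimensional vectors, for illustration only
toy = {"java": [0.9, 0.1], "程序员": [0.8, 0.3]}
print(safe_similarity(toy, "IT", "程序员"))    # None instead of a KeyError
print(safe_similarity(toy, "java", "程序员"))
```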

```
>>> model.similarity("java",u"程序员")
0.47254847166534308
>>> model.similarity("java","asp")
0.39323879449275156
>>> model.similarity("java","cpp")
0.50034941548721434
>>> model.similarity("java",u"接口")
0.4277060809963118
>>> model.similarity("java",u"运动")
0.017056843004573947
>>> model.similarity("java",u"化妆")
0.00014340386119819637
>>> model.similarity(u"眼线",u"眼影")
0.46869071954910552
```

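The similarities between "java" and "运动" (sport) and between "java" and "化妆" (makeup) are tiny, so words from distant topics do receive low scores. That wraps up model training. There is clearly plenty of room for improvement; the results depend on, among other things, the size of the corpus and the quality of the segmenter, but this experiment stops here. What we care about more is how word2vec performs in concrete application tasks: in our project we intend to use it to label clustering results automatically. If you have other applications in mind, you are welcome to discuss them. If you would like this model, contact me via a comment or private message.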

Addendum

a) The Java implementation of the similarity method that many readers have asked me about by private message:

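```java
/**
 * Cosine similarity between two word vectors.
 * getWordVector returns a word's vector, or null if the word is not in the model.
 *
 * @return the similarity, or null if either word is missing
 */
public Float similarity(String word0, String word1) {
    float[] wv0 = getWordVector(word0);
    float[] wv1 = getWordVector(word1);
    if (wv1 == null || wv0 == null) {
        return null;
    }
    float dot = 0;
    float norm0 = 0;
    float norm1 = 0;
    for (int i = 0; i < wv0.length; i++) {
        dot += wv0[i] * wv1[i];
        norm0 += wv0[i] * wv0[i];
        norm1 += wv1[i] * wv1[i];
    }
    // A plain dot product equals cosine similarity only for unit-length vectors,
    // so normalize explicitly
    if (norm0 == 0 || norm1 == 0) {
        return null;
    }
    return (float) (dot / (Math.sqrt(norm0) * Math.sqrt(norm1)));
}
```

b) Sharing the trained model, which many of you have asked about: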

word2vec - gensim model trained on Chinese Wikipedia

Password: j15o

Reference: 中英文维基百科语料上的Word2Vec实验 (Word2Vec experiments on the Chinese and English Wikipedia corpora)